Combination Product Industry News & Guidance

Sharing device-related information and wisdom that will help you succeed

How To Write a Human Factors/Usability Engineering Report for FDA Approval

Every combination product or medical device submitted to the FDA requires human factors assessment.

Preparation of your Human Factors/Usability Engineering (HF/UE*) report is one of the final steps taken in the submission process. This final report is required to support your premarket submissions, and it should demonstrate to the Food and Drug Administration (FDA) that you have developed a safe and effective medical device.

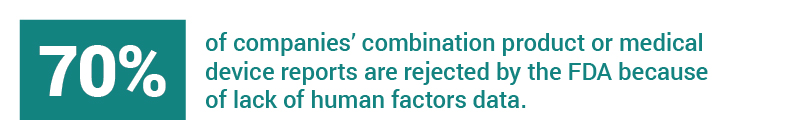

What you don’t want is for the FDA to reject your report and ask for more data. Yet this happens to companies regularly (~70% of the time). Companies on restricted budgets, such as startups with a “bootstrap” ethos, try to do it all and sometimes miss the mark on the Human Factors/Usability Engineering report. They are then required to go back, conduct more testing, and report more data, causing costly delays.

How can you avoid this potential interruption to the approval process? It often boils down to one word: Storytelling.

We’ll get to why it’s so important in a bit. First, let’s look at some of the more basic requirements and why getting reporting right matters.

What is required in Human Factors/Usability Engineering reporting?

The basics to include in a report are clearly defined in a guidance document on the FDA’s website. The guidance covers definitions, gives an overview of HF/UE, and explains whom FDA considers to be device users and what “use environment” and “user interface” mean. FDA has even issued guidance for “high priority” devices that it believes demonstrate a “clear potential for serious harm,” which makes human factors data even more important in the agency’s consideration and review activities.

Considering Human Factors/Usability Engineering for medical devices is a critical part of the FDA process. For any medical device, it makes sense to examine, study, and design for how humans interact with a device. Although general usability—how an end user interacts with a device—is important, FDA’s biggest concern is to minimize use errors that could lead to user harm.

The FDA guidance specifically states:

“FDA is primarily concerned that devices are safe and effective for the intended users, uses, and use environments. The goal is to ensure that the device user interface has been designed such that use errors that occur during use of the device that could cause harm or degrade medical treatment are either eliminated or reduced to the extent possible.”

With this in mind, you can begin to see how important it is to use your Human Factors/Usability Engineering report to convey that you’ve explicitly addressed FDA’s concerns. The FDA guidance makes comprehensive recommendations on what to include in a report, and even details how to conduct the HF/UE tasks to obtain the data required to support the claim of device safety and effectiveness.

However, the guidance doesn’t explicitly state how the report content should be positioned or structured to clearly convey what the HF/UE work revealed during the analysis, design, and verification/validation phases. It leaves that up to the company developing the report. Yet how information is packaged and conveyed can be a critical factor in a report’s success or failure.

How can you use reporting to best demonstrate that you’ve investigated and reduced or eliminated the potential for use errors, especially use errors that could cause harm?

Show, don’t tell.

The phrase “show, don’t tell” is an instruction that virtually every writer hears at some point, and it’s a useful phrase to include here. At its most basic, this instruction directs writers to use the written word to provide concrete details that pull the reader into the piece.

This brings us to observational data, and how storytelling fits well into reporting, and can make a real difference in how your report is received by the FDA.

FDA’s recommendations in the observational data section (section 8.1.5.1) state:

“The human factors validation testing should include observations of participants’ performance of all the critical use scenarios (which include all the critical tasks). The test protocol should describe in advance how test participant use errors and other meaningful use problems were defined, identified, recorded and reported. The protocol should also be designed such that previously unanticipated use errors will be observed and recorded and included in the follow-up interviews with the participants.”

Consider first how observational performance might be recorded. It could be entered into a chart, for instance, with numerical data reflecting the rates of participant use errors. Charts give an at-a-glance view of key information. This is quantitative data, and it is very commonly included in reports.

The FDA, however, is most interested in understanding the “why” underlying any observed performance challenges or difficulties. Quantitative data is limited in its ability to provide this context, and this is where qualitative data, such as participants’ subjective feedback, can help fill gaps in understanding.

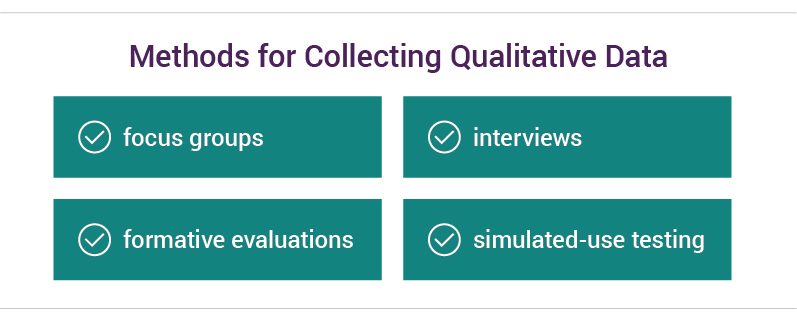

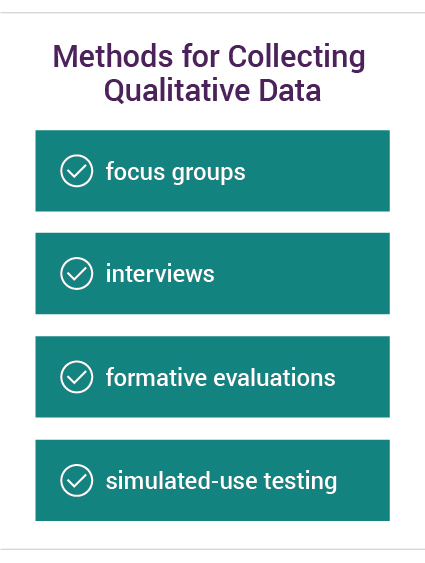

Qualitative data can be collected in a variety of ways, including focus groups or individual interviews that can provide invaluable feedback. Other means of collecting qualitative data include formative evaluations and simulated-use testing, which demonstrate the users’ perceptual, cognitive and action interactions with the device.

Conveying this information when writing the report is where storytelling comes in.

Consider the following two (fictitious) statements describing an injectable medication device.

“During testing, 36% of the device users were unable to dispense the full and correct dosage required. As a result of this finding, the dispensing system was redesigned, and subsequent retesting indicated that 100% of device users were successful using the redesigned components.”

(versus)

“During testing, we observed that the button used to dispense the required dosage was too small for a user with arthritic hands to activate fully. Nearly 36% of device users experienced this problem. The dispensing system was redesigned to incorporate a larger, soft-touch button. However, this change caused the injection device to deploy accidentally, such as when kept in a purse or pocket, wasting medication.

A subsequent redesign included an accompanying carrying case with a secure but easily opened clasp. This kept the injection device secured and minimized the potential for accidental deployment. The case can easily be opened by users with arthritis, and follow-up testing showed that all users who previously struggled with dispensing can now do so with no issues.”

Storytelling makes context easier to grasp.

Both statements contain the key information that users initially struggled to dispense medication correctly (a critical task), and that a redesign solved the problem.

However, it is the second statement—the one with more detail—that provides FDA with additional information as to what was wrong and how it was fixed to address that particular concern.

Here is all of the additional context provided by the second example:

- It provides more detail about the users, including that a portion of the anticipated population is likely to also suffer from arthritis, which is a comorbidity hazard.

- It details what the exact problem was: the button was too small, causing a critical task failure.

- It explains how this problem was addressed through the development of a larger, soft-touch button.

- It then provides additional detail on how the redesign itself caused problems.

- It then explains how those follow-on problems were addressed through the development of a carrying case.

- And finally, it shows how the solution also required consideration of arthritic users (the case had to be easy to open, yet secure).

It’s likely that all of this additional information is also contained in the report somewhere else in some form. However, having it conveyed through the short narrative paragraphs accomplishes an important goal: it puts into context what the testing entailed, what was learned, and how problems were solved—and it does so quickly, in one place.

Think of storytelling in your Human Factors/Usability Engineering reporting as a method to make it easier for the FDA to immediately grasp how you approached potential HF/UE problems when developing your medical device.

If introducing storytelling to your Human Factors/Usability Engineering reports seems like a good idea but you don’t know where to start, we can help. If you’d like to learn more about Human Factors Engineering, download our overview. Or, if you’re ready to explore how Suttons Creek can help you with your plans for FDA reporting, contact us for an assessment.

*A brief note about the acronyms used in this post. Previously, the acronym generally used to abbreviate “Human Factors Engineering/Usability Engineering” was HFE/UE. However, the FDA is now using HF/UE, and because of the switch, both versions of the acronym can be found on the FDA website and in guidance documents. We have elected to use the newer version in this post.